There are lots of creative folks out there, and one of my favorite sites is Evil Mad Scientist

Windell and Lenore are always doing something interesting, everything from cooking to electronics. They also sell kits and supplies for their projects at http://evilmadscience.com.

Long ago, I was impressed by their LED menorah, where they soldered the led’s directly to the pins of a little micro-controller. Later they came up with another holiday decoration which was a 18 segment alphanumeric display soldered directly to display messages one character at a time. I decided I wanted to do this, both as a project for the kids, and to make some specific message gifts for some friends and teachers of mine.

Makershed has a kit, but since I wanted to do my own messages anyway, the preprogrammed micro had no value. I did find it convenient to buy the displays and battery holders from EMSL. I got the ATTINY 2313’s from Digikey.

Here’s the main page describing the project, and here are the technical details.

I found the technical page useful for debugging my soldering when some of the segments didn’t light up as planned.

In order to change the messages you’ll also need a way of programming the ATTINY’s and I have a USB TinyISP from Adafruit.

You can also wire up an arduino as an ISP programmer, but I find the adafruit programmer to be cheap and very useful.

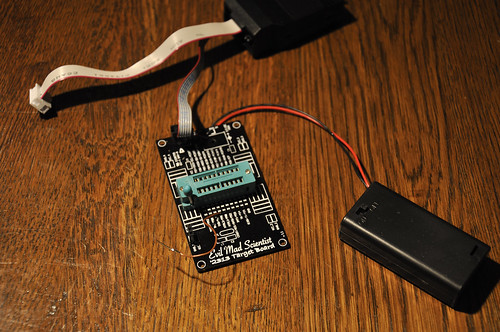

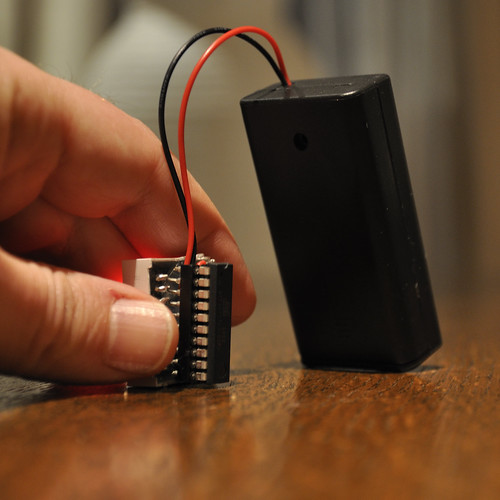

I also bought a mini-target board from http://evilmadscience.com/tinykitlist/112-tiny2313 including a new 4313 (twice the memory) and a ZIF socket that makes it really easy to remove the chip.

Here is the target board hooked up to the USBTinyISP

I would highly recommend buying enough parts for at least two Microreaderboards so you can make one with a socket. I did this (in fact I took advantage that EMSL gives you a price break for 5 displays), and even though I was sure I checked it, I noticed misspellings in one that I had already soldered to the display!

I found it difficult to solder a regular socket to the display (the pins are too short) but I had a wirewrap socket from the old days hanging around, and though it sticks out, it is great, with machined holes that make it easy to insert and remove the microprocessor.

Windell’s code is terrificly self documenting, and easy to modify. One thing I didn’t like though, was the fact that there was a RAM buffer used to hold the string being displayed. This means that you have to make sure your strings aren’t longer than 75 characters. You also have to count your strings. I did a minor hack that allowed all that to be calculated at compile time and to read the characters directly from flash memory. You can find my modified code here.

It’s fascinating just trying to read the words one character at a time. If you lose focus for an instant, you lose the word! What a great mindfulness practice. I did another minor hack supplementing the font with a few other characters to allow a spinning clock. You can find the code mods in a forum posting at EMSL’s forums here.

I’m really enjoying thinking about what I want to say in these, almost as much as making them. I also bought a larger display to make a giant one, and I’m thinking about running one off a coin cell to make a microreaderboard throwie.